THE COMING AGE OF AUGMENTATION

Mel Exon

03/10/2009

As in thrall as we may be to the firehose of new stuff drenching us in the here and now, occasionally we want to look a little further over the horizon. Two thoughts collided in the collective Labs brain a short while ago. By ‘collided’ we mean we saw a consequence of the relationship between the two that made us sit up and think:

1. The mass socialization of technology. 300 million+ Facebook users can’t be wrong. We’re still in awe of how mainstream the adoption of technology has become and just how networked the world is. Increasingly the ‘loop’ never seems to close.

2. How ill-equipped we are to cope with the deluge. Natural human processing power is sadly finite and struggling to keep up. Certainly, we know we’re not alone in adopting coping strategies like continuous partial attention and ignoring much beyond tomorrow or next week. Steve Rubel at Edelman has also written extensively on the attention crash and its relevance for marketers.

The heady mix of excitement and uneasy tension brought about by these two things has felt irresolvable and on an accelerating curve. Sure, we can speed our path through the data with better micro tools (“there’s an app for that…”), but they invariably lead us to consume more, faster, giving us the sense that we’re simply accelerating to the point where our brains implode (or, to put it more politely, are placed under too much stress). We’re not wannabe priestesses and priests of Zen around here, but is there a longer-term, more profound step change to be made where technology actually enables a more balanced life?

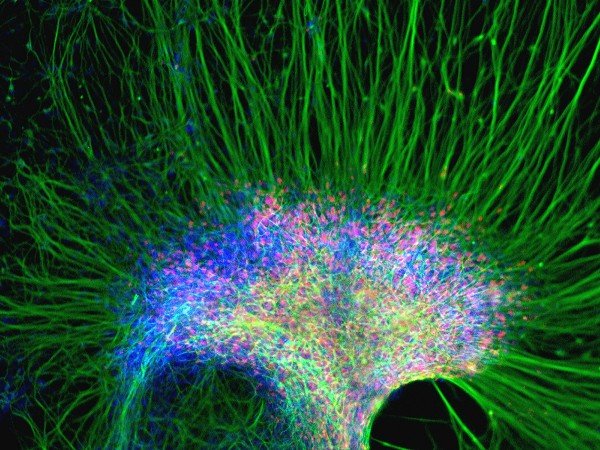

An answer began to emerge when we read a thought-provoking piece in the NYT by John Markoff subtitled “Artificial Intelligence Regains Its Allure.” AI. Cybernetics. Nanotechnology. Post Humanism? Sounds eccentric, but stay with us. Markoff’s assertion that a groundswell of attention and respect has been building around AI, in particular around an idea dubbed the Technological Singularity, made us curious. In a sentence, the idea is that once we create an artificial intelligence greater than our own, it follows that any resulting ‘Superbrain’ will be capable of augmenting itself extremely quickly to become even more intelligent and so on, leading to an explosive growth in intelligence that is (literally) beyond our imagination.

The surge in interest around the Singularity has led one of its most earnest proponents, Ray Kurzweil, to produce a film about it, due for release in early 2010.

Whether this turns out to be science fiction or science reality, we figure it’s time we paid more attention. As the futurist Alvin Toffler puts it: “It’s not only OK to think about the future but, the more you do, the more ideas you’ll have about the present.” Back to that in a bit.

Our interest has a lot to do with timing. The idea that machines might become self-aware and/or that humans might dramatically augment their intelligence is not new; the difference now is that it may happen well within our lifetime. Indeed, the shrinking time frame was what grabbed our attention first.

“How soon could such an intelligent robot be built? The coming advances in computing power seem to make it possible by 2030. And once an intelligent robot exists, it is only a small step to a robot species - to an intelligent robot that can make evolved copies of itself.”

Bill Joy, Why the Future Doesn’t Need Us, Wired, April 2000

“We have a lot of debates about that [when a computer is expected to pass the Turing test and demonstrate intelligence equal to a human's]…some people at Google say not for 100 years, but I’m more optimistic. I’d give it 20 years. Partly due to the improvements in technology. And partly” - he laughs - “to the decline of humans.”

Sergey Brin, Wired interview, August 2009

Inevitably, the questions start to pile up at this point. Given that this topic can be the territory of “futurist-nutjobs” (thanks @brainpicker for the technical term), we’ve filleted the background reading we found most useful into a separate Labs post here, which you’re welcome to check out if you want to delve deeper without the full, sand-blast-your-neural-cells experience we endured to bring this to you. It covers how the Singularity may come about, examples of companies from start-ups to Google taking it seriously, Kurzweil’s logarithmic graph depicting the pattern of exponential change leading to the Singularity, what the sceptics say and what the alternative scenarios may be.

Rewind to 2009. Whether or not everyone can agree on what a Technological Singularity is, let alone whether it will occur, most seem to agree on this: we are already moving into an era in which we will need to develop new cognitive habits, with our brains augmented, aided and abetted by technology: an Age of Augmentation, if you will.

We particularly like Jamais Cascio’s thinking here. He describes a technological evolution focused on how we manage and adapt to the immense amount of knowledge we’ve created, drawing on what scientists term “fluid intelligence”, or “the ability to find meaning in confusion and to solve new problems, independent of acquired knowledge”:

“Fluid intelligence doesn’t look much like the capacity to memorize and recite facts, the skills that people have traditionally associated with brainpower. But building it up may improve the capacity to think deeply that Carr and others fear we’re losing for good. And we shouldn’t let the stresses associated with a transition to a new era blind us to that era’s astonishing potential. We swim in an ocean of data, accessible from nearly anywhere, generated by billions of devices. We’re only beginning to explore what we can do with this knowledge-at-a-touch…

…Strengthening our fluid intelligence is the only viable approach to navigating the age of constant connectivity.”

Looking further out again, a Technological Singularity raises some extraordinary questions that vex scientists far smarter than we are. Whether the super intelligence is wholly artificial or partially human, the debate centres on how to ensure it uses its intellect for good, not evil. The prevailing wisdom, as brought to us by Hollywood in the Terminator films, A.I. and I, Robot, suggests a super intelligence will inevitably leave the human race behind or destroy us “for our own good”. Putting Hollywood to one side, one argument rather chillingly asserts that a super intelligence would be immortal, would have no need to procreate and hence would have no evolutionary need for love. Certainly a killer question must be: how can a super intelligence be created that has a capacity for altruism? In other words, how do we build “a friendly artificial intelligence” (Eliezer S. Yudkowsky, the Singularity Institute)?

Back to the present. Where does this leave us personally, and the brands we work with? (Do we prepare to cyborg it up in order to cope with the data glut, or pack up and run to the hills?) What role can or should a brand play in either scenario? What should we be thinking about now to prepare for a future that might be closer than we think?

We’re still pondering, but here are our early thoughts:

Join the debate: in an increasingly networked world, everyone can and will have an opinion about the role of technology in their lives. Brutally, it’s likely there will be considerable angst along the way. Brands can help people navigate the practical and philosophical questions and provide tangible tools to help. They can also invest appropriately. (‘Technological Responsibility’ will become the new corporate buzzword.)

In an AI world, more and more low-level tasks will be automated and completed by robots. Certain choices will become commoditised or outsourced: the typical example is repeat-purchase items (FMCGs) ordered by your fridge’s software or managed entirely by your food retailer. Brands will need to think about upgrading the importance, value and cost of what they offer to qualify for human attention and decision-making in the first place.

Tech augmentation of your product: let your mind run free. How would you usefully integrate healthcare tech into a razor or a cereal packet? How can your product helpfully take low-level decisions away from your consumer? Direct Debit was an early electronic example of this, but what else?

Counter-culture: for now at least, there’s room for brands to be marketed as tools to help Neo-Luddites swim against the tech tide. Brands like Guinness, Magners and KitKat ought to be creating virtual and real walled gardens for when you want to kick back and relax, away from the torrent of data.

What’s your starting point? Are you part of the hive, a contributor to Google’s all-powerful ‘collective intelligence’? Or a spokesperson for the outlier, the individual?

Decide which side you’re on: are you trying to humanise technology or add technology to humans?

However it all turns out, it strikes us we need to stay curious and lean into this, not lean back. As Chris Anderson put it recently:

“This was one of those freaky moments when the future sneaks up and smacks you…. Technology wants to be invented and we are almost powerless to stop it. We are hard-wired to create the future, be it good or bad. Invention is its own master.”

Please let us know what you think, and check out our other post ‘I Think, Therefore I Am (a Self-aware, Superhuman Cyborg)’ for more on this.